A mistake in the robots.txt file

I want to share a bug that I have already fixed, because the problem is an interesting one. I found it with a site analysis tool. The bug was in robots.txt.

I had planned a section on my site about search engine heroes.

Because the section had not been created yet, I closed all of its pages from indexing. I listed each of those pages in the robots.txt file.

Link to robots.txt — https://seoheronews.com/robots.txt.

This saved crawl budget for my website, which is new and poorly indexed. However, among the rules in robots.txt, I disallowed the profile page of a former Google employee.

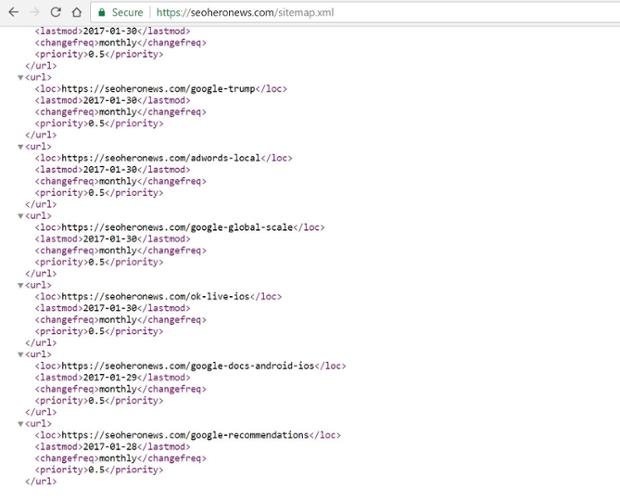

Code

Disallow: /matt-cutts

But the URL of a news article about that employee's departure from Google began with the same path as the disallowed profile page.

URL /matt-cutts-google
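The collision happens because a `Disallow` value is matched as a path prefix, not as an exact URL. Here is a minimal sketch of that behaviour using Python's standard `urllib.robotparser`. The `User-agent: *` line, the `is_allowed` helper and the third path are assumptions made for illustration; the real file may differ, and Google's own parser is not what runs here.

```python
from urllib.robotparser import RobotFileParser

# Minimal robots.txt with only the rule quoted above.
# The "User-agent: *" line is an assumption; the real file may differ.
ROBOTS_LINES = [
    "User-agent: *",
    "Disallow: /matt-cutts",
]


def is_allowed(path: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the rules above allow user_agent to fetch the path."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_LINES)
    return parser.can_fetch(user_agent, "https://seoheronews.com" + path)


if __name__ == "__main__":
    # The last path is a hypothetical renamed URL, not the one actually used.
    for path in ("/matt-cutts", "/matt-cutts-google", "/news-matt-cutts-google"):
        print(path, "allowed" if is_allowed(path) else "blocked")
```

Any path that starts with `/matt-cutts` is blocked by the rule, so the news URL is caught together with the profile page, while a path that starts differently is not affected.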

Link to sitemap — https://seoheronews.com/sitemap-image.xml.

I had not anticipated this case.

As a result, the news page was known to Google but was disallowed from indexing. I did not change the complex mechanism that generates the robots.txt file. I simply changed the URL of the page on the site.