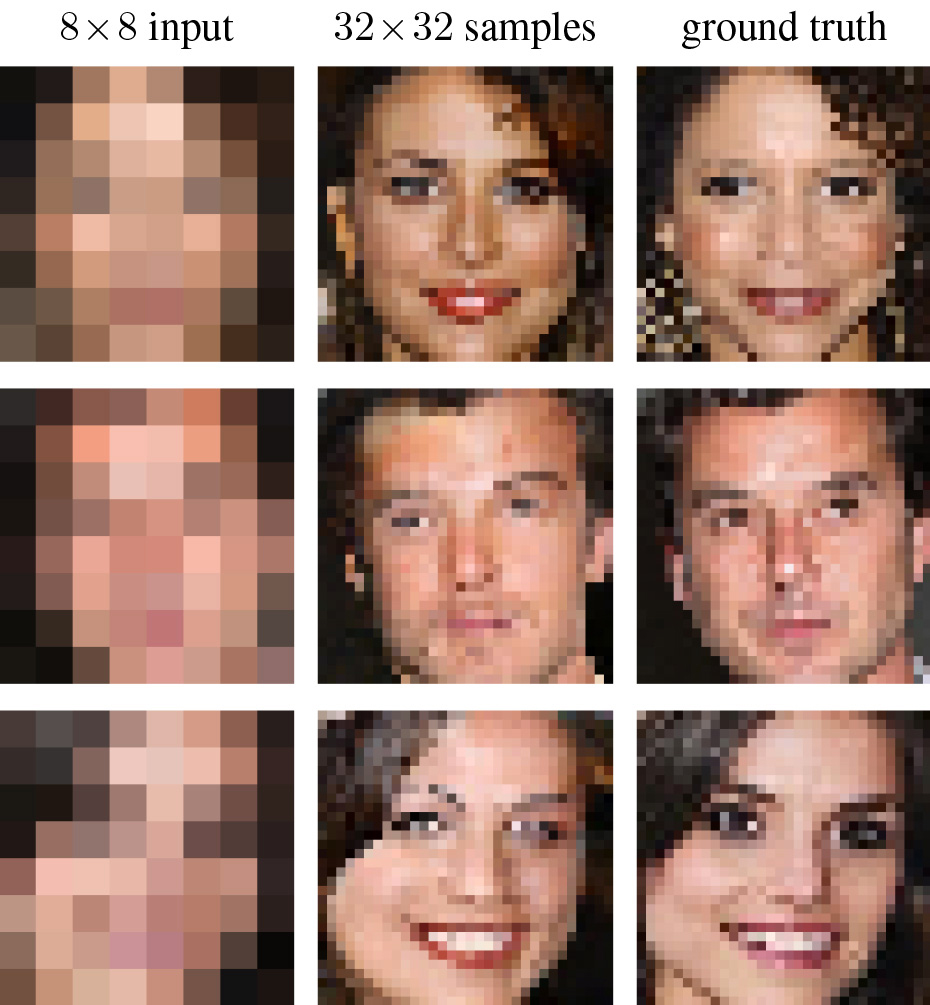

Google's neural network learns to recreate photos from 64 pixels

The Google Brain research team has created a neural network that can synthesize a higher-resolution picture from a 64-pixel source image.

The system tries to guess what the original image might have looked like before it was reduced to 64 pixels. In other words, it synthesizes a photo that could plausibly be the source image. It cannot restore the real photo or recover the detail that was actually lost.
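Why this is a guess rather than a restoration can be shown with a minimal sketch. It assumes the 64-pixel input is made by averaging each 4x4 block of a 32x32 grayscale image (the article does not say how the images were reduced). Two very different originals then shrink to exactly the same 8x8 image, so nothing in the small image tells the network which one it came from.

```python
def downscale(image, factor=4):
    """Average each factor x factor block of a square grayscale image (list of rows)."""
    small = len(image) // factor
    result = []
    for by in range(small):
        row = []
        for bx in range(small):
            block = [
                image[y][x]
                for y in range(by * factor, (by + 1) * factor)
                for x in range(bx * factor, (bx + 1) * factor)
            ]
            row.append(sum(block) / len(block))
        result.append(row)
    return result


# Two different 32x32 "photos": a fine black/white checkerboard and a flat grey.
checkerboard = [[255.0 if (x + y) % 2 else 0.0 for x in range(32)] for y in range(32)]
flat_grey = [[127.5] * 32 for _ in range(32)]

small_a = downscale(checkerboard)
small_b = downscale(flat_grey)

print(len(small_a), len(small_a[0]))  # 8 8, i.e. 64 pixels
print(small_a == small_b)  # True: the fine detail is gone
```

Because the reduction is many-to-one, any "enlarged" version is only one plausible candidate among many.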

The process has two stages. First, a standard neural network (the conditioning network) compares the 8x8-pixel image with similar higher-resolution images that have been reduced to the same size. This establishes the overall patterns and colors.
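The comparison step can be illustrated with a deliberately simple stand-in. The conditioning network described above is a trained neural network, not a lookup table. The sketch below swaps in a nearest-neighbour search over a small, hypothetical set of 32x32 examples: each example is shrunk to 8x8 (assuming 4x4 block averaging) and compared with the input by squared pixel difference. It only shows the idea of matching coarse patterns and colours.

```python
def downscale(image, factor=4):
    """Average each factor x factor block of a square grayscale image."""
    small = len(image) // factor
    return [
        [
            sum(image[y][x]
                for y in range(by * factor, (by + 1) * factor)
                for x in range(bx * factor, (bx + 1) * factor)) / factor ** 2
            for bx in range(small)
        ]
        for by in range(small)
    ]


def squared_distance(a, b):
    """Sum of squared per-pixel differences between two equally sized images."""
    return sum((pa - pb) ** 2 for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))


def closest_example(low_res, examples, factor=4):
    """Return the high-res example whose downscaled version best matches low_res."""
    return min(examples, key=lambda hi: squared_distance(downscale(hi, factor), low_res))


# Hypothetical 32x32 examples: dark, bright, and dark-left/bright-right.
dark = [[20.0] * 32 for _ in range(32)]
bright = [[230.0] * 32 for _ in range(32)]
split = [[20.0 if x < 16 else 230.0 for x in range(32)] for _ in range(32)]

# An 8x8 input that is dark on the left and bright on the right.
query = [[30.0 if x < 4 else 220.0 for x in range(8)] for _ in range(8)]

best = closest_example(query, [dark, bright, split])
print(best is split)  # True
```

A real conditioning network generalises instead of picking one stored picture, but the goal is the same: decide what the coarse layout and colours of the original were.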

In the second stage, a prior network comes into play: it uses details from high-resolution images to fill in the low-resolution one.
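A minimal sketch of how such a prior can fill in detail, assuming it works autoregressively: pixels are produced one at a time in raster order, and each is drawn from a distribution that depends only on pixels already generated (the way PixelCNN-style models work). The scoring function `toy_prior_logits` and the 8-level palette are hypothetical simplifications; a real prior network learns its scores from high-resolution images.

```python
import math
import random

LEVELS = list(range(8))  # hypothetical: 8 grey levels instead of 256


def toy_prior_logits(image, y, x):
    """Hypothetical prior: prefer levels close to already-generated neighbours (left, above)."""
    neighbours = []
    if x > 0:
        neighbours.append(image[y][x - 1])
    if y > 0:
        neighbours.append(image[y - 1][x])
    if not neighbours:
        return [0.0 for _ in LEVELS]  # no context yet: all levels equally likely
    target = sum(neighbours) / len(neighbours)
    return [-abs(level - target) for level in LEVELS]


def softmax(logits):
    """Turn scores into probabilities (shifted by the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_image(size, rng):
    """Generate a size x size image pixel by pixel, each conditioned on earlier pixels."""
    image = [[None] * size for _ in range(size)]
    for y in range(size):          # raster order: row by row,
        for x in range(size):      # left to right within a row
            probs = softmax(toy_prior_logits(image, y, x))
            image[y][x] = rng.choices(LEVELS, weights=probs)[0]
    return image


for row in sample_image(4, random.Random(0)):
    print(row)
```

Sampling instead of always taking the most likely level is what lets the model produce different, equally plausible details on different runs.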

Finally, the outputs of the two neural networks are combined to produce the most probable version of the original image.
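The article does not say exactly how the two outputs are merged. One simple way, assumed here for illustration, is to add each network's per-level scores (logits) for a pixel and turn the sum into probabilities with a softmax. The most probable level is then the one both networks favour jointly. The score values below are made up.

```python
import math


def softmax(logits):
    """Turn scores into probabilities (shifted by the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def combine(conditioning_logits, prior_logits):
    """Add per-level scores from both networks and normalise to probabilities."""
    summed = [c + p for c, p in zip(conditioning_logits, prior_logits)]
    return softmax(summed)


# Hypothetical scores for one pixel over four grey levels (dark ... bright).
conditioning = [0.0, 2.0, 2.0, 0.0]  # coarse colour: "mid-grey, level 1 or 2"
prior = [0.0, 0.5, 1.5, 0.0]         # local detail: "continue the lighter edge"

probs = combine(conditioning, prior)
best = max(range(len(probs)), key=probs.__getitem__)
print(best)  # 2
```

Here the conditioning network alone cannot choose between levels 1 and 2; the prior's preference for level 2 breaks the tie.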

According to Google Brain, this approach lets the system generate a 32×32 image that resembles the original.
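For scale, a short count of what going from 8×8 to 32×32 means:

$$
\frac{32 \times 32}{8 \times 8} = \frac{1024}{64} = 16
$$

The output has 16 times as many pixels as the input, so each input pixel corresponds to a 4×4 block of output pixels whose detail has to be synthesized.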

Left: the image given to the neural network; center: the synthesized image; right: the original, reduced to 32x32 pixels.

Google has not said whether it will use the new network in its existing services.